Market Pulse: Can AMD take on Nvidia in AI?

In 5 minutes, understand how AMD is poised to challenge Nvidia in the AI chips market and whether it can become a serious competitor.

The AI boom has caused Nvidia’s revenue to skyrocket this year, and skyrocket isn’t an exaggeration: the company’s revenue grew 101% year-over-year in Q2 and 206% year-over-year in Q3!

The main reason for this is that when the AI boom started late last year, the company had by far the best chips for AI and there just wasn’t any competition. If your company was serious about AI, you had to buy Nvidia’s chips.

AMD wants to change this, and it’s making rapid progress.

Last week, AMD launched its new MI300 AI chip series, which it claims outperforms Nvidia’s flagship H100 AI chip. Specifically, AMD says the MI300X is on par with the H100 for AI model training and beats the H100 by 10-20% for AI inference.

With the launch of the MI300 series, AMD seems to have significantly narrowed the gap with Nvidia in AI chip technology. Although it’s too early to say definitively, AMD looks well positioned for a surge in revenue and margin growth akin to what Nvidia experienced this year. We think the market has only just started pricing in this possibility, and that AMD’s stock is likely undervalued.

However, it’s also important to note that AMD’s own projections show it has a lot of catching up to do, and this thesis needs a few years to play out. More on this in The Details section below.

If the MI300 AI chip series eventually proves to significantly narrow AMD’s AI tech gap with Nvidia, here are two main reasons why AMD’s stock is undervalued relative to Nvidia’s:

First, Nvidia trades at a price-to-sales (P/S) multiple of 26, while AMD trades at a P/S multiple of only 9.8.

Second, unlike Nvidia’s, AMD’s data center revenue has been tepid this year, giving it room for significant upside if AI chip sales ramp up rapidly in the near term.
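To put the multiple gap in perspective, here is a rough back-of-the-envelope sketch. It assumes, purely hypothetically, that AMD’s sales stay flat and that the market re-rates AMD all the way up to Nvidia’s 26x P/S multiple; neither is a forecast. The helper name `implied_rerating` is our own illustrative label, not a standard formula name.

```python
# Price-to-Sales (P/S) multiples quoted in the article
NVDA_PS = 26.0
AMD_PS = 9.8


def implied_rerating(current_ps: float, target_ps: float) -> float:
    """Hypothetical helper: the factor by which market cap would change if the
    P/S multiple moved from current_ps to target_ps with sales held constant.
    Since market cap = P/S * sales, fixed sales means the cap scales with P/S."""
    return target_ps / current_ps


factor = implied_rerating(AMD_PS, NVDA_PS)
print(f"AMD at Nvidia's multiple, flat sales: {factor:.2f}x its current market cap")
```

In practice, a re-rating would likely come with (and be partly driven by) changes in sales, so this only illustrates how wide the valuation gap is; it is not a price target.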

The Details

Caution: AMD’s AI story will take a few years to play out

Nvidia’s AI data center business has a huge head start on AMD, and AMD’s own projections show that it is still a few years away from catching up (at least for now). Nvidia’s data center revenue grew from $4.28B in Q1 this year to $14.51B in Q3, while AMD, even with the launch of the MI300X, projects just $2B in data center GPU revenue for all of next year.

That’s a Godzilla-sized revenue hole that AMD needs to fill to catch up.
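A quick sketch of the size of that hole, using only two figures quoted above: Nvidia’s $4.28B Q1 data center revenue and AMD’s roughly $2B projection for next year. The “run rate” line assumes, hypothetically, that every Nvidia quarter merely matched Q1, which understates Nvidia given its later growth. Note also that Nvidia’s data center segment includes more than GPUs (networking, for example), so this is an apples-to-oranges approximation rather than a precise comparison.

```python
# Figures quoted in the article, in billions of USD
NVDA_DC_Q1 = 4.28           # Nvidia data center revenue, a single quarter (Q1)
AMD_GPU_DC_NEXT_YEAR = 2.0  # AMD's projected data center GPU revenue, full next year

# Naive annualized run rate: hypothetically assume all four quarters match Q1
nvda_q1_run_rate = NVDA_DC_Q1 * 4

# AMD's whole-year projection as a fraction of ONE Nvidia quarter
ratio_vs_one_quarter = AMD_GPU_DC_NEXT_YEAR / NVDA_DC_Q1

# How many times larger Nvidia's Q1 run rate is than AMD's full-year projection
ratio_vs_run_rate = nvda_q1_run_rate / AMD_GPU_DC_NEXT_YEAR

print(f"AMD's full-year projection is {ratio_vs_one_quarter:.0%} of Nvidia's Q1 data center revenue")
print(f"Nvidia's Q1 run rate is {ratio_vs_run_rate:.1f}x AMD's full-year projection")
```

Even against Nvidia’s smallest quarter of the year, AMD’s projected annual figure comes in at under half of a single quarter.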

Other challenges: impending H200 launch and the software gap

Even though the MI300X appears to match or slightly exceed the H100’s performance, Nvidia plans to launch its next-generation AI chip, the H200, in Q2 2024. This leaves AMD roughly one generation behind Nvidia in AI chip development.

In addition, AMD has a large gap to bridge in software support and adoption for its AI chips. Nvidia’s CUDA software platform is widely adopted in the industry, and customers can easily find an army of engineers who know how to use CUDA to tune AI workloads for Nvidia’s GPUs. The same can’t be said for AMD’s ROCm software.

Less China risk is good

Nvidia’s sky-high valuation is currently threatened by a shrinking China business, which accounts for roughly a quarter of its data center revenue, as the Biden administration ramps up semiconductor export sanctions.

In fact, US Commerce Secretary Gina Raimondo recently warned Nvidia, “if you redesign a chip around a particular cut line that enables them to do AI, I’m going to control it the very next day”. At the same event, Raimondo also said: “We cannot let China get these chips. Period. We’re going to deny them our most cutting-edge technology” and “I know there are CEOs of chip companies in this audience who were a little cranky with me when I did that because you’re losing revenue. Such is life. Protecting our national security matters more than short-term revenue.”

In this respect, AMD has a surprising strength relative to Nvidia: its significantly smaller data center business has less exposure to China, making it less vulnerable to worsening semiconductor export sanctions.

More on the MI300X chip

As mentioned above, according to AMD, the MI300X is on par with the H100 for AI model training and beats the H100 by 10-20% for AI inference.

In terms of raw specs, the MI300X dominates the H100 with 30% more FP8 FLOPS, 60% more memory bandwidth, and more than 2x the memory capacity.

Although AMD didn’t disclose the price of the new chips, massive market demand for AI chips has propelled H100 prices to the stratosphere, with some reports suggesting that each chip could sell for more than $30,000. These absurd prices give AMD significant room to undercut Nvidia.

As for MI300X launch customers, AMD has assembled an impressive lineup, including:

OpenAI (for its Triton 3.0 project)

Microsoft (Azure)

Meta (“AI inference workloads such as processing AI stickers, image editing, and operating its assistant”)

Oracle (will use the chip to power its upcoming generative AI cloud service)

OEMs (original equipment manufacturers): Dell, HP, Lenovo, and Supermicro

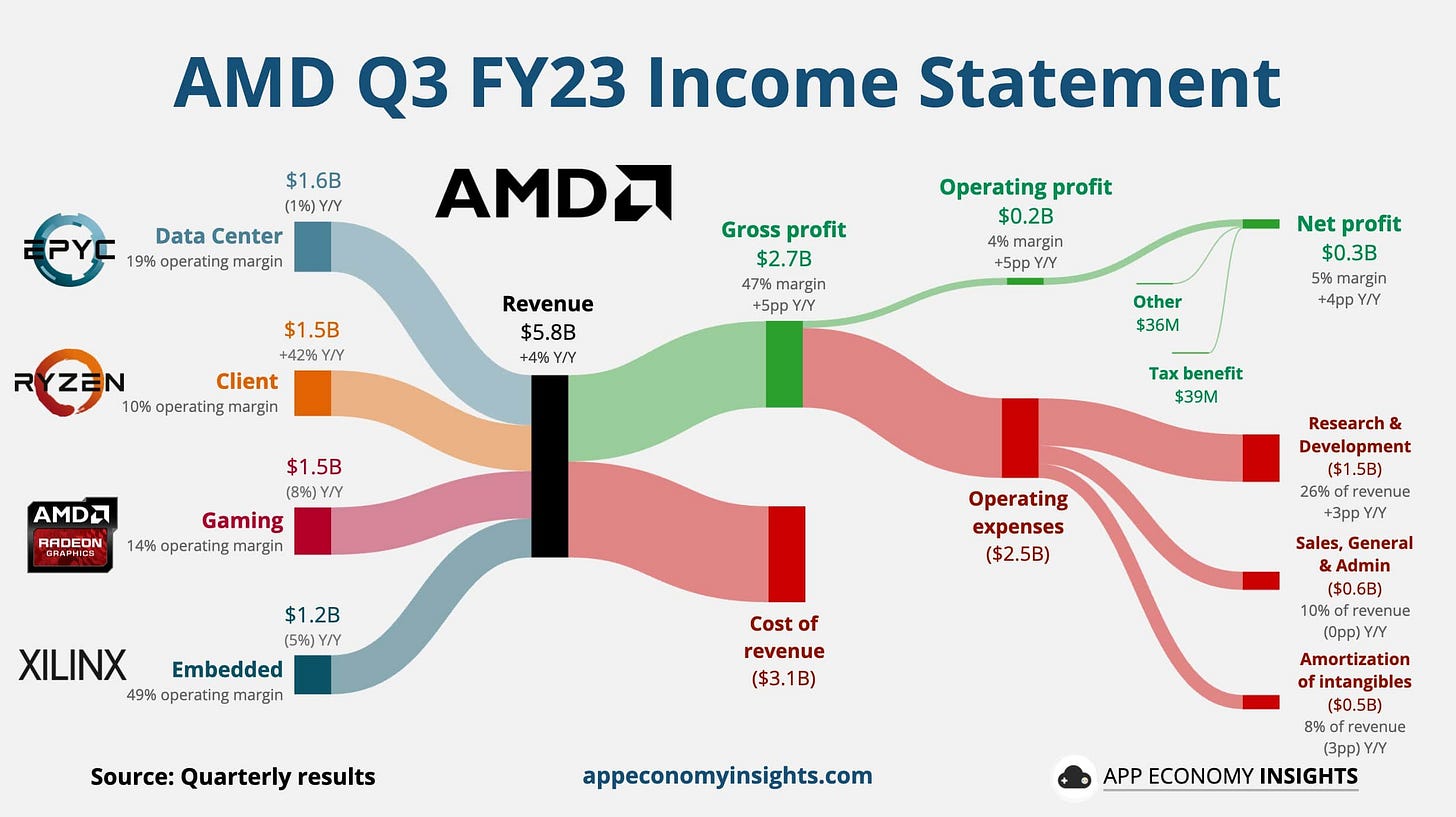

AMD’s Q3 income statement visualized